Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function

Related Articles: Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function

Introduction

With great pleasure, we will explore the intriguing topic related to Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function. Let’s weave interesting information and offer fresh perspectives to the readers.

Table of Content

- 1 Related Articles: Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function

- 2 Introduction

- 3 Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function

- 3.1 PySpark RDDs: The Foundation of Distributed Data Processing

- 3.2 The map Function: A Transformation for Element-wise Operations

- 3.3 Benefits of Using map in PySpark RDDs

- 3.4 Practical Applications of the map Function

- 3.5 Understanding the Importance of map in Spark Processing

- 3.6 Frequently Asked Questions (FAQs) About PySpark RDD map

- 3.7 Tips for Effective Use of map in PySpark RDDs

- 3.8 Conclusion

- 4 Closure

Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function

In the realm of big data processing, Apache Spark stands as a powerful and versatile framework. At its core lies the Resilient Distributed Dataset (RDD), a fundamental data structure that enables efficient and scalable computations. This article delves into the crucial transformation function map within the PySpark RDD ecosystem, shedding light on its mechanics, applications, and significance in data manipulation.

PySpark RDDs: The Foundation of Distributed Data Processing

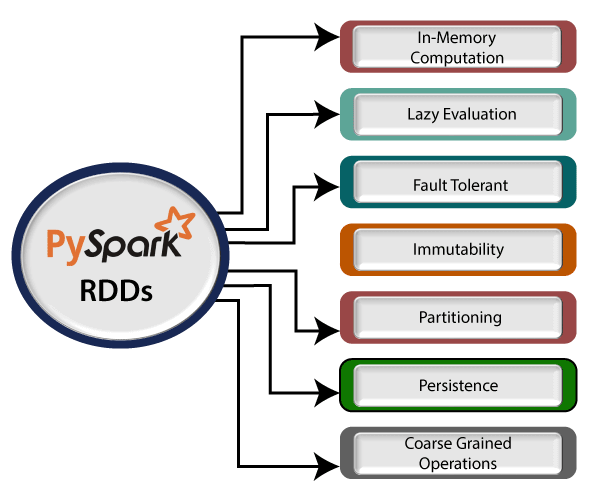

RDDs, or Resilient Distributed Datasets, are the cornerstone of Spark’s processing paradigm. They represent immutable, distributed collections of data that can be processed in parallel across a cluster of machines. This distributed nature allows Spark to handle massive datasets with remarkable efficiency, making it a popular choice for various data-intensive tasks.

Key Characteristics of RDDs:

- Immutable: Once created, an RDD cannot be modified directly. Changes are achieved through transformations, which generate new RDDs.

- Distributed: Data is partitioned and stored across multiple nodes in a cluster, enabling parallel processing.

- Fault-tolerant: In case of node failures, Spark automatically recovers data from other nodes, ensuring data integrity.

- Lazy Evaluation: Transformations are not executed immediately but rather when an action is triggered, optimizing resource utilization.

The map Function: A Transformation for Element-wise Operations

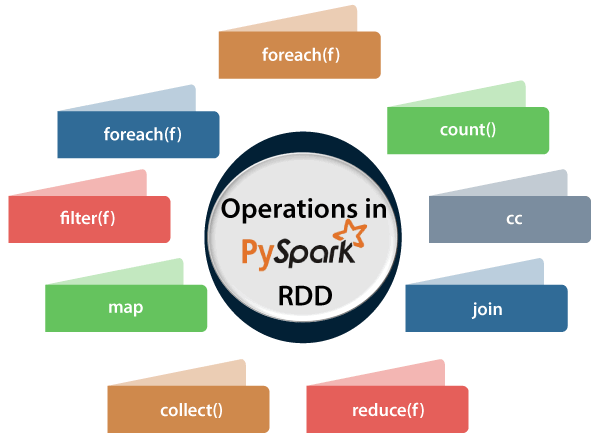

The map function is a fundamental transformation in PySpark RDDs, allowing users to apply a user-defined function to each element of an RDD, resulting in a new RDD with transformed elements. This function serves as a powerful tool for various data manipulation tasks, including:

- Data Cleaning and Preprocessing: Transforming data into a consistent format, removing outliers, or handling missing values.

- Feature Engineering: Creating new features from existing ones, such as extracting relevant information from text data.

- Data Enrichment: Adding contextual information to each data point, such as location data or timestamps.

- Data Aggregation: Preparing data for subsequent aggregation operations, such as calculating sums or averages.

Understanding the map Function:

The map function takes two arguments:

- A user-defined function: This function defines the transformation to be applied to each element.

- An RDD: This is the input RDD containing the elements to be transformed.

The function iterates through each element of the input RDD, applies the user-defined function to it, and generates a new RDD with the transformed elements.

Example:

# Create an RDD with numbers

numbers_rdd = sc.parallelize([1, 2, 3, 4, 5])

# Define a function to square each element

def square(x):

return x * x

# Apply the map function to square each element

squared_numbers_rdd = numbers_rdd.map(square)

# Collect the transformed elements

squared_numbers = squared_numbers_rdd.collect()

# Print the squared numbers

print(squared_numbers) # Output: [1, 4, 9, 16, 25]In this example, the map function applies the square function to each element of the numbers_rdd, creating a new RDD (squared_numbers_rdd) containing the squared values.

Benefits of Using map in PySpark RDDs

The map function offers several advantages in PySpark RDD processing:

- Parallelism: The transformation is applied concurrently across multiple nodes, significantly speeding up data processing.

-

Scalability:

mapeffectively handles large datasets, enabling efficient processing on distributed clusters. - Flexibility: The user-defined function allows for a wide range of transformations, making it adaptable to various data manipulation scenarios.

-

Code Clarity: The

mapfunction provides a concise and readable way to express element-wise operations, enhancing code maintainability.

Practical Applications of the map Function

The map function finds numerous applications in real-world data processing scenarios. Here are some illustrative examples:

- Data Cleaning: Removing unwanted characters, converting data types, or handling missing values using custom functions.

- Text Processing: Extracting keywords, performing sentiment analysis, or stemming words using libraries like NLTK.

- Feature Engineering: Creating new features from existing ones, such as extracting time features from timestamps or calculating ratios between variables.

- Data Enrichment: Adding contextual information to each data point, such as location data, weather information, or demographic data.

Example:

# Create an RDD with customer data

customer_data_rdd = sc.parallelize([

("Alice", 25, "USA"),

("Bob", 30, "UK"),

("Charlie", 28, "Canada")

])

# Define a function to add a "Country Code" feature

def add_country_code(data):

country, age, location = data

if location == "USA":

country_code = "US"

elif location == "UK":

country_code = "GB"

else:

country_code = "CA"

return (country, age, location, country_code)

# Apply the map function to add the "Country Code" feature

enriched_customer_data_rdd = customer_data_rdd.map(add_country_code)

# Collect the enriched data

enriched_customer_data = enriched_customer_data_rdd.collect()

# Print the enriched customer data

print(enriched_customer_data)In this example, the map function applies the add_country_code function to each element of the customer_data_rdd, adding a "Country Code" feature to each customer record.

Understanding the Importance of map in Spark Processing

The map function plays a pivotal role in Spark’s data processing pipeline. It serves as the foundation for various transformations, enabling efficient and scalable data manipulation. By applying user-defined functions to each element of an RDD, map empowers developers to perform a wide range of operations, from data cleaning and preprocessing to feature engineering and data enrichment.

Frequently Asked Questions (FAQs) About PySpark RDD map

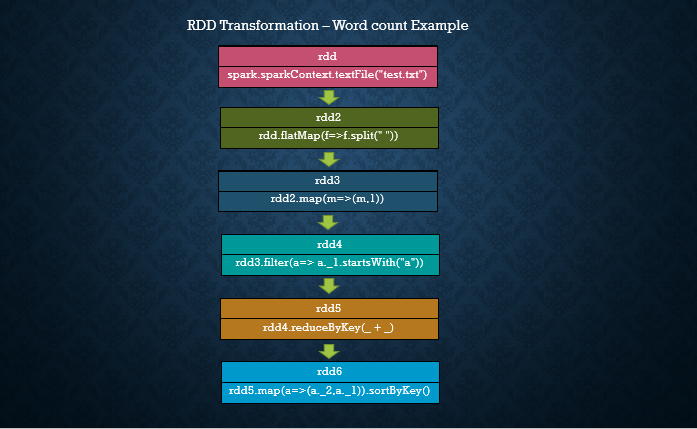

1. What is the difference between map and flatMap in PySpark?

The map function applies a function to each element of an RDD, producing a new RDD with the same number of elements. In contrast, flatMap applies a function to each element and then flattens the resulting sequence into a single RDD. This allows for the creation of RDDs with a different number of elements than the input RDD.

2. How does map handle data partitioning in Spark?

The map function operates on each partition of the input RDD independently. This ensures parallelism and efficient processing across the cluster. The output RDD will have the same number of partitions as the input RDD.

3. Can map be used with multiple input RDDs?

No, map is designed to operate on a single input RDD. For operations involving multiple RDDs, you can use functions like join, cogroup, or union.

4. What are some common pitfalls to avoid when using map?

- Incorrect Function Definition: Ensure the user-defined function correctly transforms the input element and returns the desired output.

- Data Type Mismatches: Verify that the data types of the input and output elements are compatible.

-

Performance Bottlenecks: Avoid complex or computationally intensive functions within

mapto prevent performance degradation.

5. Is map suitable for all data manipulation tasks?

While map is a versatile function, it may not be the most efficient choice for all tasks. For example, if you need to perform aggregations or group operations, other functions like reduceByKey or aggregateByKey might be more appropriate.

Tips for Effective Use of map in PySpark RDDs

- Optimize Function Performance: Minimize the computational complexity of the user-defined function to maximize processing speed.

- Consider Data Partitioning: Choose an appropriate number of partitions for the input RDD to optimize parallelism and resource utilization.

-

Use

flatMapfor Flattening: If you need to flatten the output of the user-defined function, useflatMapinstead ofmap. -

Explore Alternatives: For specific tasks, consider using other functions like

reduceByKey,aggregateByKey, orfilterfor better efficiency.

Conclusion

The map function in PySpark RDDs is a fundamental transformation that enables efficient and scalable data manipulation. It allows users to apply custom functions to each element of an RDD, making it a versatile tool for a wide range of data processing tasks. By understanding the mechanics and benefits of map, developers can leverage its power to perform data cleaning, feature engineering, data enrichment, and other essential operations within the Spark ecosystem.

Closure

Thus, we hope this article has provided valuable insights into Understanding the Power of Transformations: A Deep Dive into PySpark RDDs and the map Function. We appreciate your attention to our article. See you in our next article!